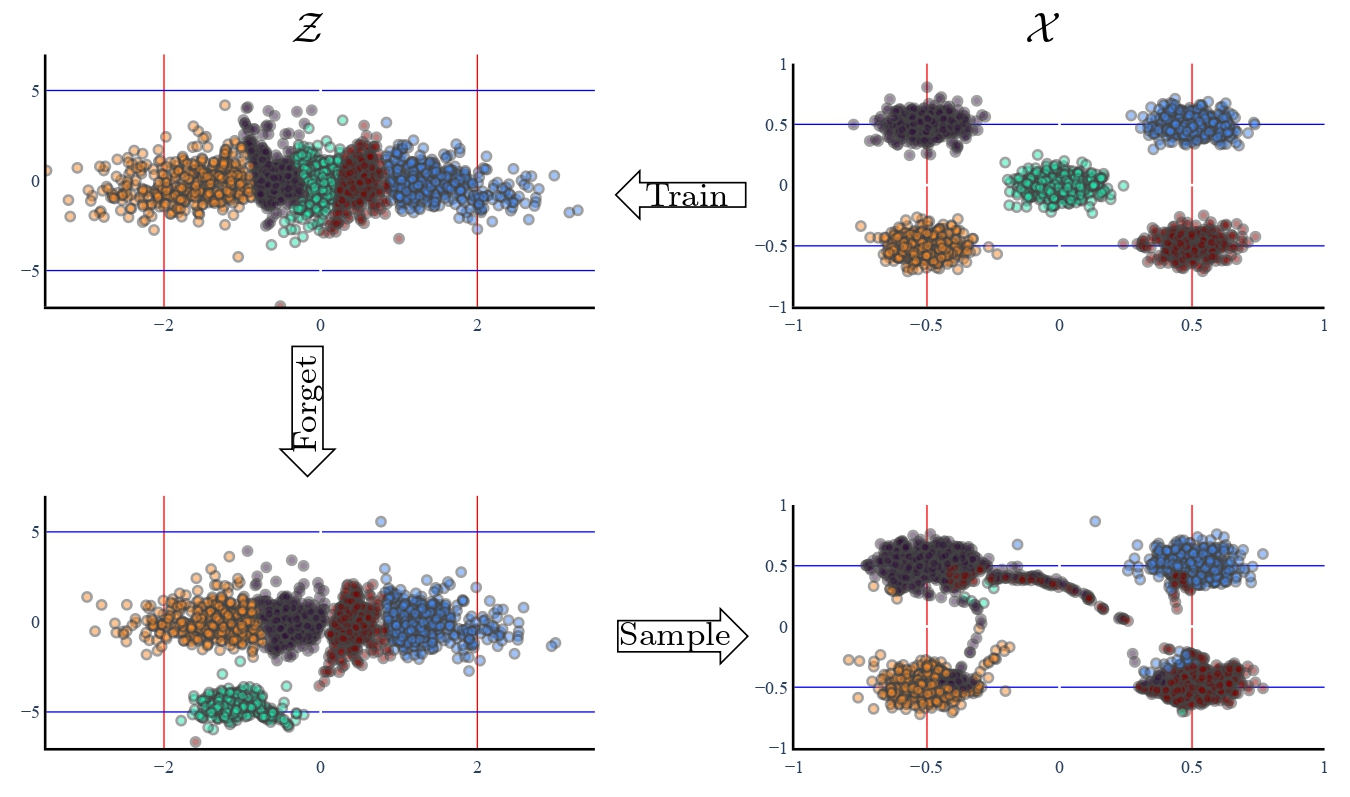

We propose an algorithm for taming Normalizing Flow models, i.e., changing the probability that the model produces a specific image or image category.

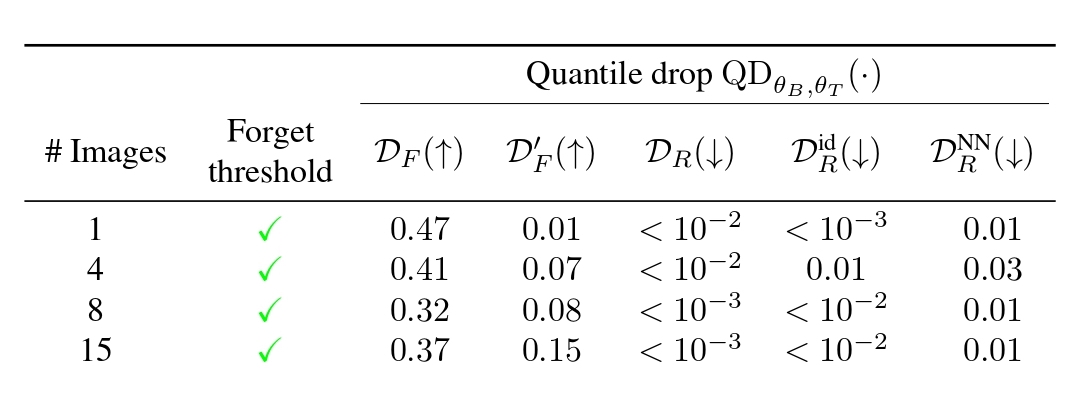

We focus on Normalizing Flows because they can compute the exact likelihood of generating a given image. We demonstrate taming using models that generate human faces, a subdomain with many interesting privacy and bias considerations.
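A normalizing flow is an invertible map $f$ from data $x$ to latents $z$ with a tractable Jacobian, so its exact log-likelihood follows from the change-of-variables formula $\log p_X(x) = \log p_Z(f(x)) + \log\left|\det \frac{\partial f}{\partial x}\right|$. The toy sketch below illustrates this with a one-dimensional affine flow and a standard normal base; `AffineFlow1D` is a hypothetical name, and real image flows stack many learned invertible layers and sum their log-determinants rather than using a single affine map.

```python
import math


def std_normal_logpdf(z: float) -> float:
    # Log-density of the base distribution p_Z = N(0, 1).
    return -0.5 * z * z - 0.5 * math.log(2.0 * math.pi)


class AffineFlow1D:
    """Toy invertible flow f(x) = (x - mu) / sigma, with sigma = exp(log_sigma)."""

    def __init__(self, mu: float, log_sigma: float) -> None:
        self.mu = mu
        self.log_sigma = log_sigma

    def forward(self, x: float) -> tuple[float, float]:
        # Map data x to latent z; for this map log|df/dx| = -log_sigma.
        z = (x - self.mu) * math.exp(-self.log_sigma)
        return z, -self.log_sigma

    def inverse(self, z: float) -> float:
        # Sampling direction: x = mu + sigma * z.
        return self.mu + math.exp(self.log_sigma) * z

    def log_prob(self, x: float) -> float:
        # Change of variables: log p_X(x) = log p_Z(f(x)) + log|df/dx|.
        z, log_det = self.forward(x)
        return std_normal_logpdf(z) + log_det


flow = AffineFlow1D(mu=1.0, log_sigma=math.log(2.0))
print(flow.log_prob(1.0))
```

For this single affine layer the result is exactly the log-density of a Gaussian with mean `mu` and standard deviation `exp(log_sigma)`, which makes the formula easy to check by hand.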

Our method can be used for privacy, e.g., removing a specific person from a model's output, and for debiasing, e.g., forcing a model to output specific image categories according to a given target distribution.
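One simple way to make "according to a given target distribution" measurable, assumed here rather than taken from the paper, is to label a batch of generated images with an attribute classifier, compute the empirical fraction of samples per category, and compare it to the target with total variation distance; a successful debiasing step should drive this distance toward zero. The labels and category names below are hypothetical stand-ins for classifier outputs.

```python
from collections import Counter


def category_distribution(labels, categories):
    # Empirical fraction of generated samples assigned to each category.
    counts = Counter(labels)
    return {c: counts[c] / len(labels) for c in categories}


def total_variation(p, q):
    # TV distance between two distributions defined over the same categories.
    return 0.5 * sum(abs(p[c] - q[c]) for c in p)


# Hypothetical classifier labels for four generated samples, and a 50/50 target.
labels = ["A", "A", "A", "B"]
target = {"A": 0.5, "B": 0.5}
empirical = category_distribution(labels, target.keys())
print(empirical, total_variation(empirical, target))
```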

Taming is achieved by a fast fine-tuning process that takes only minutes and does not require retraining the model from scratch. We evaluate our method qualitatively and quantitatively, showing that generation quality remains intact while the desired changes are applied.
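The fine-tuning objective is not specified in this summary, so the following is only a toy sketch under our own assumptions, not the paper's loss. A 1D affine flow (a Gaussian with learnable mean and log-scale) starts from its maximum-likelihood fit to a "retain" set and is fine-tuned by gradient descent so that the retain set stays likely while the exact log-likelihood of a single "forget" sample is pushed below a threshold `tau` through a hinge penalty. The names `tame`, `tau`, and `lam` are hypothetical.

```python
import math


def log_prob(x, mu, log_sigma):
    # Exact log-density of a 1D affine flow x = mu + exp(log_sigma) * z, z ~ N(0, 1).
    z = (x - mu) * math.exp(-log_sigma)
    return -0.5 * z * z - log_sigma - 0.5 * math.log(2.0 * math.pi)


def grad_log_prob(x, mu, log_sigma):
    # Analytic gradient of log_prob with respect to (mu, log_sigma).
    z = (x - mu) * math.exp(-log_sigma)
    return z * math.exp(-log_sigma), z * z - 1.0


def tame(retain, forget, mu, log_sigma, tau=-4.0, lam=1.0, lr=0.01, steps=500):
    # Hypothetical objective, minimized by plain gradient descent:
    #   mean_{x in retain} -log p(x)  +  lam * max(0, log p(forget) - tau)
    for _ in range(steps):
        g_mu, g_ls = 0.0, 0.0
        for x in retain:
            d_mu, d_ls = grad_log_prob(x, mu, log_sigma)
            g_mu -= d_mu / len(retain)
            g_ls -= d_ls / len(retain)
        # The hinge only contributes while the forget sample is still too likely.
        if log_prob(forget, mu, log_sigma) > tau:
            d_mu, d_ls = grad_log_prob(forget, mu, log_sigma)
            g_mu += lam * d_mu
            g_ls += lam * d_ls
        mu -= lr * g_mu
        log_sigma -= lr * g_ls
    return mu, log_sigma


# "Pretrained" model: the maximum-likelihood Gaussian fit of the retain set
# (mean 0, variance 2, so log_sigma = 0.5 * log 2).
retain = [-2.0, -1.0, 0.0, 1.0, 2.0]
mu0, ls0 = 0.0, 0.5 * math.log(2.0)
mu1, ls1 = tame(retain, 2.5, mu0, ls0)
print("log p(forget):", log_prob(2.5, mu0, ls0), "->", log_prob(2.5, mu1, ls1))
```

Because the hinge switches off once the forget sample's log-likelihood drops below `tau`, the retain term is free to pull the parameters back, so the model changes only as much as needed. This mirrors, in miniature, the goal of lowering a target's likelihood while leaving the rest of the distribution largely intact.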

@InProceedings{Malnick_2024_WACV,
  author    = {Malnick, Shimon and Avidan, Shai and Fried, Ohad},
  title     = {Taming Normalizing Flows},
  booktitle = {Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)},
  month     = {January},
  year      = {2024},
  pages     = {4644--4654}
}